Adjust Inference Smoothing

This section explains how to test and optimize your trained model using the Inference Smoothing feature in Reality AI Tools. Inference smoothing improves model stability by combining several predictions into a single result, either over a window of consecutive predictions or within a metadata group. This reduces noise in real-time predictions.

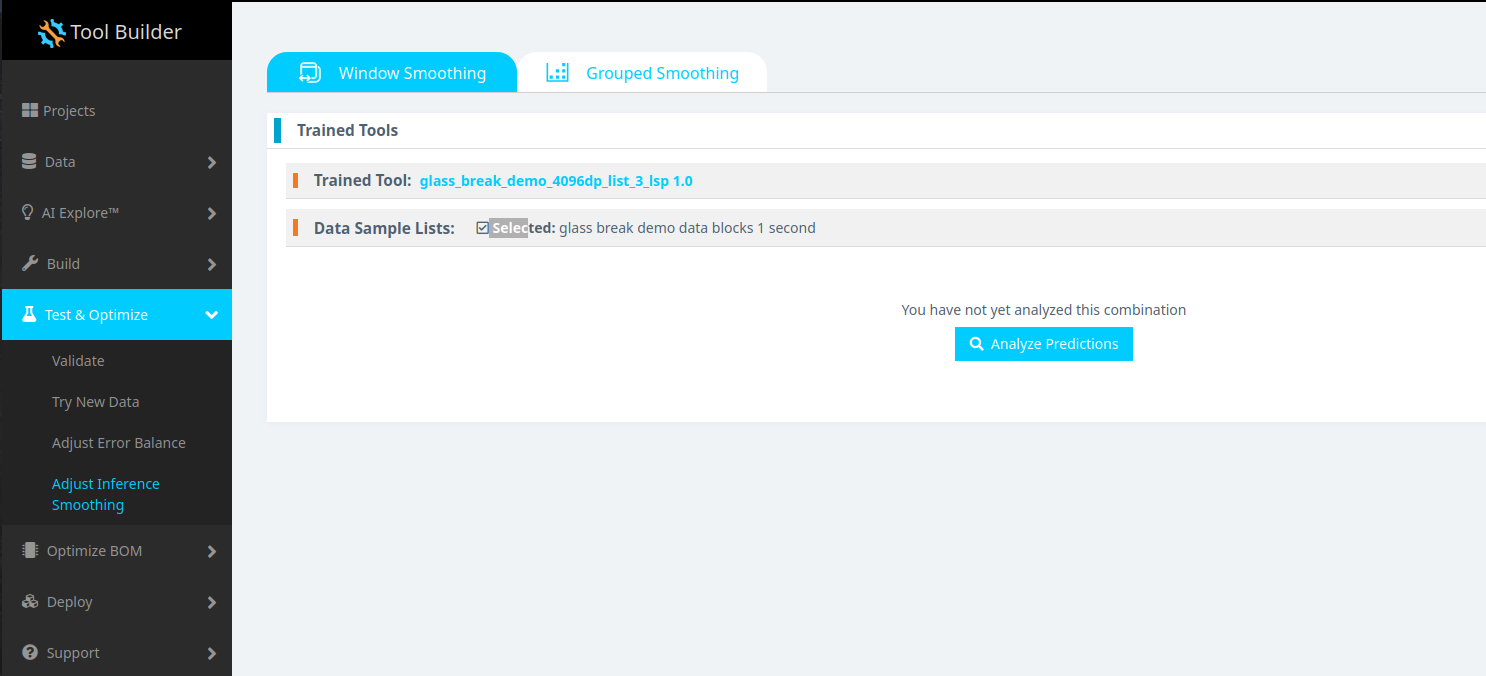

Accessing the Test and Optimize Section

- Navigate to Test & Optimize > Adjust Inference Smoothing from the left navigation pane.
- Select the Trained Tool (your trained model) and the Data Sample List (the dataset you want to test with).
- Click Analyze Predictions.

This sets up the graphical user interface (GUI) for applying smoothing techniques to the model’s predictions.

Understanding Inference Smoothing Techniques

There are two types of inference smoothing you can apply:

- Window Smoothing: Applies smoothing over a fixed number of predictions, allowing you to reduce the impact of random fluctuations.
- Grouped Smoothing: Applies smoothing by grouping predictions based on metadata (such as source file names), making it useful for context-aware predictions.

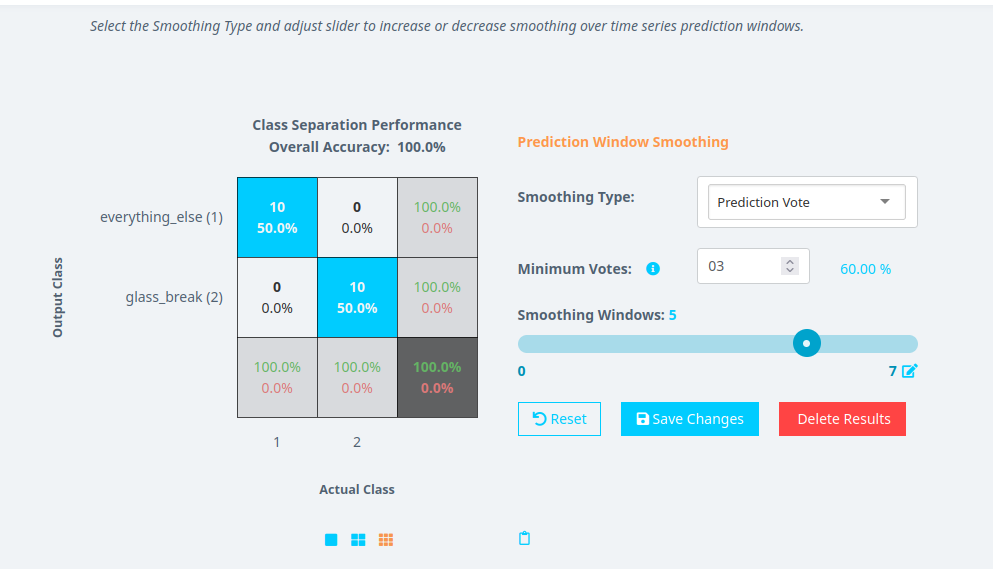

Applying Window Smoothing

- Choose the Window Smoothing tab.
- From the dropdown menu, select the Smoothing Type:
  - Prediction Vote: Uses the most frequent predicted class (e.g., glass_break or everything_else) within the smoothing window.
  - Class Score: Averages each class’s score (a probability between 0 and 1) across the predictions in the smoothing window.

- If using Prediction Vote, configure the following:
  - Minimum Vote (Optional): Define the minimum number of votes required for a class to be selected. This applies only to Prediction Vote mode.
  - Smoothing Window: Set the number of consecutive predictions over which the mode (most frequent value) is calculated.
  - Example: For a glass break detection system, if the Smoothing Window is set to 5 and the Minimum Vote is 3, the model classifies the segment as glass_break if the glass_break class appears at least 3 out of 5 times.

- If using Class Score:
  - Set only the Smoothing Window.
  - Because this method uses probabilistic scores (between 0 and 1), you do not need to set a minimum vote value.
- Click Save Changes to save your settings.

Saving changes stores the model with the smoothing configuration on the server, making it available for future testing and embedded export.
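The two Window Smoothing modes can be sketched in Python as a sliding window over a stream of predictions. This is a minimal illustration under stated assumptions, not the Reality AI Tools implementation: the function names window_vote and window_score are hypothetical, the sketch emits nothing until the window is full, and it returns None when the most frequent class has fewer votes than the Minimum Vote (this page does not say what the tool does in that case).

```python
from collections import Counter, deque
from typing import Iterable, Iterator, List, Optional, Sequence


def window_vote(predictions: Iterable[str], window: int,
                min_vote: int = 0) -> Iterator[Optional[str]]:
    """Rolling Prediction Vote over the last `window` predicted labels.

    Assumptions (hypothetical, not confirmed by Reality AI Tools): nothing is
    emitted until the window is full, and None is emitted when the most frequent
    class has fewer than `min_vote` votes. min_vote=0 means plain voting.
    """
    buf = deque(maxlen=window)  # the oldest prediction drops out automatically
    for label in predictions:
        buf.append(label)
        if len(buf) < window:
            continue
        top, count = Counter(buf).most_common(1)[0]
        yield top if count >= min_vote else None


def window_score(scores: Iterable[Sequence[float]], window: int) -> Iterator[int]:
    """Rolling Class Score: average each class's score over the last `window`
    predictions and emit the index of the class with the highest mean."""
    buf = deque(maxlen=window)
    for row in scores:
        buf.append(row)
        if len(buf) < window:
            continue
        n_classes = len(row)
        means: List[float] = [sum(r[c] for r in buf) / window for c in range(n_classes)]
        yield max(range(n_classes), key=lambda c: means[c])


preds = ["glass_break", "glass_break", "everything_else",
         "glass_break", "glass_break", "everything_else"]
print(list(window_vote(preds, window=5, min_vote=3)))
# ['glass_break', 'glass_break']

# Hypothetical scores, ordered as [glass_break, everything_else].
print(list(window_score([[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]], window=2)))
# [0, 1]
```

A deque with maxlen keeps only the most recent predictions, so each new prediction evicts the oldest one. This mirrors how a rolling window behaves during live inference.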

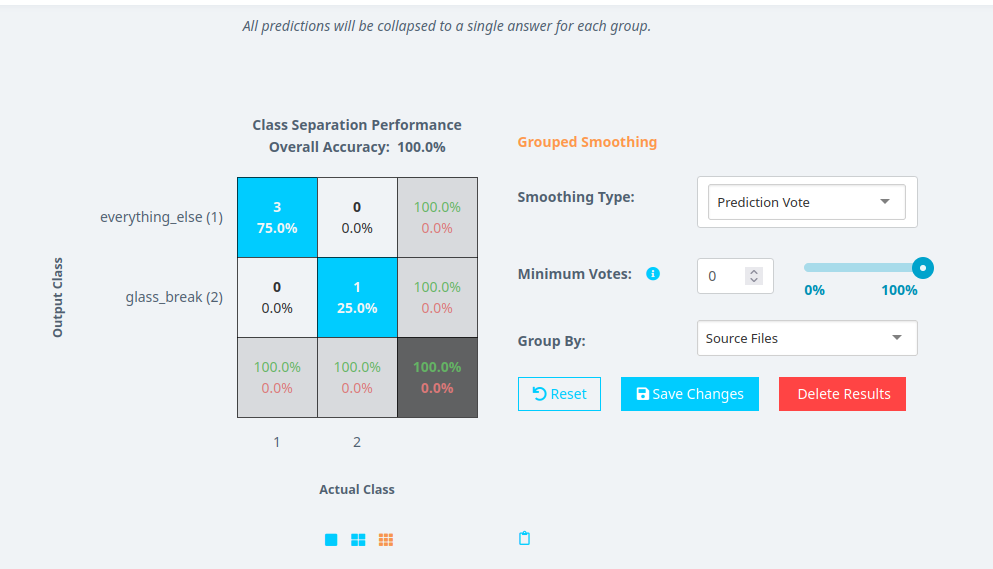

Applying Grouped Smoothing

- Choose the Grouped Smoothing tab.
- Select the Smoothing Type:
  - Prediction Vote: Uses the most frequent predicted class within each group.
  - Class Score: Averages the probabilistic class score within each group.
- Set the Group By option:
  - You can group predictions by metadata (e.g., Source File, Date, or any other metadata field).
  - Smoothing is then applied separately within each metadata group.

- If using Prediction Vote:
  - Set the Minimum Votes value (optional).
  - If set to 0, the model uses a majority vote approach.
  - If set to a value greater than 0, the model requires at least that many votes for a class to be selected.
  - Example: If Group By is set to Source File and Minimum Votes is 0, the model performs majority vote smoothing within each file.

- If using Class Score:
  - Set the Group By metadata field.
  - You do not need to set a minimum vote value, because this method uses probabilistic scores.
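Grouped Smoothing can be sketched the same way by collecting predictions per metadata value before combining them. This is a hypothetical illustration, not the tool’s implementation: grouped_vote and grouped_score are made-up names, the file names are invented sample data, "majority vote" is treated as picking the most frequent class, and mapping a group to None when its top class falls short of Minimum Votes is an assumption.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Sequence, Tuple


def grouped_vote(rows: Sequence[Tuple[str, str]],
                 min_votes: int = 0) -> Dict[str, Optional[str]]:
    """Grouped Prediction Vote. rows are (group_key, predicted_class) pairs,
    where group_key is a metadata value such as a source file name.
    Assumption: a group whose top class has fewer than min_votes votes maps to None."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for key, label in rows:
        groups[key].append(label)
    result: Dict[str, Optional[str]] = {}
    for key, labels in groups.items():
        top, count = Counter(labels).most_common(1)[0]
        result[key] = top if count >= min_votes else None
    return result


def grouped_score(rows: Sequence[Tuple[str, Sequence[float]]],
                  class_names: Sequence[str]) -> Dict[str, str]:
    """Grouped Class Score: average each class's score within a group and
    return the class with the highest mean for that group."""
    groups: Dict[str, List[Sequence[float]]] = defaultdict(list)
    for key, scores in rows:
        groups[key].append(scores)
    result: Dict[str, str] = {}
    for key, members in groups.items():
        means = [sum(m[c] for m in members) / len(members)
                 for c in range(len(class_names))]
        best = max(range(len(class_names)), key=lambda c: means[c])
        result[key] = class_names[best]
    return result


votes = [("rec_a.wav", "glass_break"), ("rec_a.wav", "everything_else"),
         ("rec_a.wav", "glass_break"), ("rec_b.wav", "everything_else")]
print(grouped_vote(votes))               # {'rec_a.wav': 'glass_break', 'rec_b.wav': 'everything_else'}
print(grouped_vote(votes, min_votes=3))  # {'rec_a.wav': None, 'rec_b.wav': None}

scored = [("rec_a.wav", [0.8, 0.2]), ("rec_a.wav", [0.4, 0.6]),
          ("rec_b.wav", [0.1, 0.9])]
print(grouped_score(scored, ["glass_break", "everything_else"]))
# {'rec_a.wav': 'glass_break', 'rec_b.wav': 'everything_else'}
```

Unlike the window sketch, each group is smoothed only after all of its predictions are available, which is why grouping suits offline evaluation by file or session.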

Example: How Inference Smoothing Works

Scenario:

You have a model that predicts two classes: glass_break and everything_else.

Example 1: Window Smoothing with Prediction Vote

- Smoothing Type: Prediction Vote

- Smoothing Window: 5

- Minimum Vote: 3

| Prediction Sequence | Smoothed Result |
|---|---|
| glass_break, glass_break, everything_else, glass_break, glass_break | glass_break |
| everything_else, everything_else, glass_break, glass_break, glass_break | glass_break |
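The Example 1 results can be checked by counting the votes in each five-prediction sequence. The helper smooth_window is hypothetical, and returning None when the top class has fewer votes than the Minimum Vote is an assumption made for illustration.

```python
from collections import Counter
from typing import List, Optional, Tuple


def smooth_window(window: List[str], min_vote: int) -> Tuple[Optional[str], int]:
    """Return (smoothed class, its vote count).
    Assumption: the class is None if it has fewer than min_vote votes."""
    top, count = Counter(window).most_common(1)[0]
    return (top if count >= min_vote else None), count


# The two prediction sequences from the Example 1 table (window = 5, minimum vote = 3).
sequences = [
    ["glass_break", "glass_break", "everything_else", "glass_break", "glass_break"],
    ["everything_else", "everything_else", "glass_break", "glass_break", "glass_break"],
]

for seq in sequences:
    label, votes = smooth_window(seq, min_vote=3)
    print(f"{label} ({votes} of {len(seq)} votes)")
# glass_break (4 of 5 votes)
# glass_break (3 of 5 votes)
```

The second sequence only just meets the Minimum Vote of 3; with a Minimum Vote of 4, the same window would not produce glass_break.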

Example 2: Grouped Smoothing with Class Score

- Smoothing Type: Class Score

- Group By: Source File

- Each file is treated as a separate group for averaging class scores.

| Source File | glass_break Scores (Raw) | Smoothed Result |
|---|---|---|
| File1 | 0.7, 0.6, 0.4 | glass_break (average glass_break score ≈ 0.57) |
| File2 | 0.3, 0.2, 0.8 | everything_else (average glass_break score ≈ 0.43) |

When to Use Each Smoothing Technique

- Use Window Smoothing when you want a rolling vote or average over a fixed number of consecutive predictions, which suits live inference scenarios.

- Use Grouped Smoothing when you want to smooth predictions based on contextual metadata (e.g., file names, recording session).

- Experiment with different smoothing window sizes to find the right balance between responsiveness and stability: larger windows suppress more noise but react more slowly to real changes.

- For Prediction Vote, set the Minimum Vote high enough to suppress false positives, but not so high that true events are missed.

- For Grouped Smoothing, choose metadata fields that align with your testing conditions (e.g., source file, recording date).

Saving and Exporting the Optimized Model

After adjusting and applying smoothing, click Save Changes.

- The model is saved with the new smoothing settings on the server.

- These settings will also be applied when you export the model for embedded deployment.